Discover how Azure Kinect can optimize the packaging process for shipments

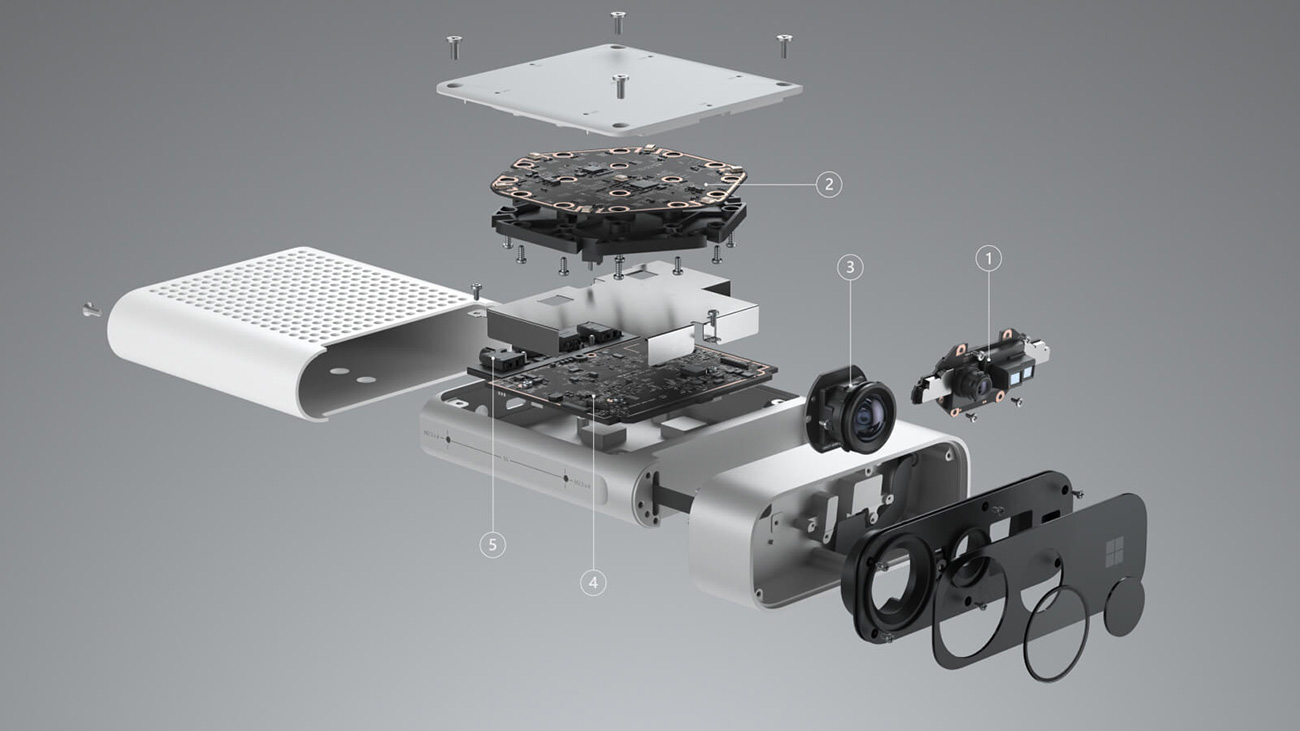

Azure Kinect is an advanced Microsoft device for spatial computing, offering a color camera, a depth camera, motion sensors, and microphones.

What happens when this technology is applied to a company's production line?

How? We developed custom software that uses Kinect's capabilities to manage work on the packaging line correctly. The application optimizes tasks by assisting operators and providing consolidated, accurate management information. As a result, many labeling errors, and the costs associated with them, are avoided.

The problem we needed to solve

Our client handles thousands of orders a day, which must be prepared in its warehouses and dispatched to carriers.

When the box for a prepared order is labeled, its weight and size must be recorded. Weight is easy to obtain with a scale, which is inexpensive.

Measuring the box's dimensions, however, is more complex, and the solutions on the market are often expensive. Using the capabilities of Azure Kinect, our development team implemented an automatic identifier that detects box dimensions instantly and accurately.

Sending correct data ensures that the labels are correct, which avoids problems with carriers. It also reduces the cost of processing post-shipment price adjustments.

Which Kinect features do we use?

As mentioned above, the device offers a color camera, a depth camera, motion sensors, and microphones.

For this project, the last two were not relevant. Either camera could have been used, but during the survey and analysis stage we ruled out the color image because it meant:

- Risk of the box blending in with the table (similar colors or textures).

- Difficulties when the box's appearance is altered by labels, adhesive tape, etc.

- High sensitivity to lighting conditions.

- Information only in the X-Y plane (length and width), not in Z (height).

- No real-world measurements (positions in pixels, not millimeters).

The best option, therefore, was to work with the depth image:

- The appearance of the box is irrelevant.

- There is no room for confusion: if a box is present, it has volume.

- It provides information on the full volume (X, Y, and Z).
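As a rough illustration of why depth makes segmentation simple, the following minimal Python sketch separates box pixels from the table. It assumes the sensor looks straight down at a flat table, that the empty-table distance is known from a calibration frame, and that depth values are in millimeters with 0 marking an invalid pixel (as in Azure Kinect depth images). The function name `segment_box` and the 20 mm noise margin are hypothetical choices, not the project's actual code.

```python
from statistics import median


def segment_box(depth_mm, table_mm, margin_mm=20):
    """Split a depth frame into box pixels and estimate the box height.

    depth_mm:  2D list of sensor distances in millimeters (0 = invalid pixel).
    table_mm:  sensor-to-empty-table distance, assumed known from calibration.
    margin_mm: hypothetical noise margin; a pixel closer than
               table_mm - margin_mm is treated as part of an object.
    Returns (mask, height_mm, top_distance_mm); height is 0 and the
    top distance is None when no box pixels are found.
    """
    # A pixel belongs to the box only because it is closer than the table.
    mask = [[0 < d < table_mm - margin_mm for d in row] for row in depth_mm]
    box_depths = [
        d
        for row, mask_row in zip(depth_mm, mask)
        for d, inside in zip(row, mask_row)
        if inside
    ]
    if not box_depths:
        return mask, 0, None
    # The median is robust to a few noisy pixels along the box edges.
    top_mm = median(box_depths)
    return mask, table_mm - top_mm, top_mm
```

Labels, tape, and print play no role here: a pixel counts as part of the box only because it is closer to the sensor than the table, and the box height follows directly as the difference between the two distances.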

Configuration process and results

With this in mind, we built an application that uses the device to determine the volume of each object and, from that volume, to identify it.

Using our own mathematical logic, supported by the AForge library, we established how to identify each box from the following parameters: its height, the sensor-to-box distance, the object's vertices, the Kinect's field of view (FOV), and the size of the captured image.
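The object's vertices are probably the least obvious of these parameters to obtain. A C# implementation would more likely rely on AForge.NET's own blob and shape-detection utilities; the Python sketch below instead shows one simple heuristic, assuming a binary mask of box pixels is already available (for example, pixels closer to the sensor than the table) and that the box is rotated well under 45 degrees in the image. `box_corners` is a hypothetical helper, not an AForge function.

```python
def box_corners(mask):
    """Approximate the four corners of a single box in a boolean mask.

    Heuristic: for a rectangle rotated well under 45 degrees, its corners are
    the pixels that minimize or maximize x + y and x - y. Image y grows
    downward, so the result is ordered top-left, top-right, bottom-right,
    bottom-left as (x, y) tuples. Returns None if the mask is empty.
    """
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pts:
        return None
    top_left = min(pts, key=lambda p: p[0] + p[1])
    top_right = max(pts, key=lambda p: p[0] - p[1])
    bottom_right = max(pts, key=lambda p: p[0] + p[1])
    bottom_left = min(pts, key=lambda p: p[0] - p[1])
    return [top_left, top_right, bottom_right, bottom_left]
```

Because the corners are pixel centers, edges measured between them come out about one pixel short; a production version would also need to tolerate noisy mask edges, which this heuristic does not.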

Processed together, these values make it possible to instantly identify each box that passes through the packaging line.
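How these values combine can be sketched with a simple pinhole-camera approximation. With the sensor looking straight down, a surface at distance d seen through a field of view θ spans 2 · d · tan(θ/2), so each of the N pixels across the image covers 2 · d · tan(θ/2) / N. The box height is the sensor-to-table distance minus the sensor-to-box-top distance, and the length and width follow from the pixel distances between the vertices, scaled at the distance of the top face. The Python below is a minimal sketch under those assumptions (no lens distortion, no camera tilt); `mm_per_pixel` and `box_dimensions` are hypothetical names, not AForge or Azure Kinect SDK calls.

```python
import math


def mm_per_pixel(distance_mm, fov_deg, image_pixels):
    """Footprint of one pixel at a given distance (pinhole approximation)."""
    # Full extent seen at this distance is 2 * d * tan(FOV / 2).
    return 2.0 * distance_mm * math.tan(math.radians(fov_deg) / 2.0) / image_pixels


def box_dimensions(vertices_px, table_mm, top_mm, fov_deg, image_size_px):
    """Estimate (length, width, height) in mm from four top-face vertices.

    vertices_px:   four (x, y) pixel corners of the box top, in order around it.
    table_mm:      sensor-to-table distance.
    top_mm:        sensor-to-box-top distance.
    fov_deg:       (horizontal, vertical) field of view in degrees.
    image_size_px: (width, height) of the depth image in pixels.
    """
    height = table_mm - top_mm
    # The top face lies at top_mm, so pixels are scaled at that distance.
    sx = mm_per_pixel(top_mm, fov_deg[0], image_size_px[0])
    sy = mm_per_pixel(top_mm, fov_deg[1], image_size_px[1])
    sides = []
    # Two adjacent edges (v0-v1 and v1-v2) are enough for a rectangle.
    for (x0, y0), (x1, y1) in zip(vertices_px, vertices_px[1:3]):
        sides.append(math.hypot((x1 - x0) * sx, (y1 - y0) * sy))
    return max(sides), min(sides), height
```

For example, with the table at 1500 mm, the box top at 1000 mm, a hypothetical 90° FOV on a 1000 × 1000 image, and adjacent vertices 150 and 100 pixels apart, the sketch returns roughly 300 × 200 × 500 mm. In a real deployment, the Azure Kinect Sensor SDK's calibration functions, such as `k4a_calibration_2d_to_3d`, use the device's actual intrinsics and distortion model and would be preferable to this FOV shortcut.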

As a result, the client was able to optimize its packaging line by automating the measurement of box sizes and reducing labeling errors.

This not only shortened order processing times but also reduced problems with carriers, along with the time and expense of correcting them.

About Azure Kinect

As Microsoft describes it, Azure Kinect is a state-of-the-art spatial computing developer kit with sophisticated computer vision and speech models, advanced AI sensors, and a range of powerful SDKs that can be connected to Azure Cognitive Services.

With Azure Kinect, businesses can take advantage of spatial data and context to improve operational safety, increase performance, improve bottom-line results, and revolutionize the customer experience.

It offers:

- 1-MP depth sensor with wide and narrow field-of-view (FOV) options, so it can be optimized for each application.

- 7-microphone array for far-field speech and sound capture.

- 12-MP RGB video camera for an additional color stream aligned with the depth stream.

- Accelerometer and gyroscope (IMU) for sensor orientation and spatial tracking.

- External sync pins to easily synchronize sensor streams from multiple Kinect devices simultaneously.
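To show what the wide and narrow field-of-view options mean for box measurement, this sketch compares the approximate footprint of one depth pixel at a given working distance for the two unbinned depth modes, using the nominal figures from Microsoft's Azure Kinect DK hardware specification (NFOV: 640 × 576 pixels, 75° × 65°, 0.5 to 3.86 m; WFOV: 1024 × 1024 pixels, 120° × 120°, 0.25 to 2.21 m). It relies on a simple pinhole approximation (each pixel covers 2 · d · tan(FOV/2) / N) and ignores lens distortion, which is significant across a 120° field of view, so the figures are only indicative. `DEPTH_MODES`, `pixel_footprint_mm`, and `finest_mode` are hypothetical names.

```python
import math

# Nominal figures for the two unbinned depth modes (Azure Kinect DK hardware spec).
DEPTH_MODES = {
    "NFOV_UNBINNED": {"res": (640, 576), "fov": (75.0, 65.0), "range_m": (0.5, 3.86)},
    "WFOV_UNBINNED": {"res": (1024, 1024), "fov": (120.0, 120.0), "range_m": (0.25, 2.21)},
}


def pixel_footprint_mm(mode, distance_m):
    """Approximate (horizontal, vertical) size of one pixel in mm at distance_m."""
    spec = DEPTH_MODES[mode]
    return tuple(
        2.0 * distance_m * 1000.0 * math.tan(math.radians(fov) / 2.0) / n
        for fov, n in zip(spec["fov"], spec["res"])
    )


def finest_mode(distance_m):
    """Return the in-range mode with the smallest pixel footprint, or None."""
    candidates = [
        mode
        for mode, spec in DEPTH_MODES.items()
        if spec["range_m"][0] <= distance_m <= spec["range_m"][1]
    ]
    if not candidates:
        return None
    # Compare on the coarser of the two axes.
    return min(candidates, key=lambda m: max(pixel_footprint_mm(m, distance_m)))
```

At a 1 m mounting height, for instance, the narrow mode resolves about 2.4 mm per pixel horizontally against about 3.4 mm for the wide mode, so it captures box edges more finely, while the wide mode is the only one of the two that works closer than 0.5 m.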

Reinvent the future of your company with projects like this one. We would love to be part of it: contact us.

Vicente Pérez López

Senior Developer at Virtusway
